#Computer Ethics

Text

Don't Let Your Child Lie About Their Age in Games. Here's Why.

If you have children, check out this interesting article about computer games, privacy, and children.

#computer games#Cyber Security#computer ethics#techonology#computer#computers#computing#children and computer games

6 notes

Text

Our society's growing reliance on computer systems that were initially intended to "help" people make analyses and decisions, but which have long since both surpassed the understanding of their users and become indispensable to them, is a very serious development. It has two important consequences. First, decisions are made with the aid of, and sometimes entirely by, computers whose programs no one any longer knows explicitly or understands. Hence no one can know the criteria or the rules on which such decisions are based. Second, the systems of rules and criteria that are embodied in such computer systems become immune to change, because, in the absence of a detailed understanding of the inner workings of a computer system, any substantial modification of it is very likely to render the whole system inoperative and possibly unrestorable. Such computer systems can therefore only grow. And their growth and the increasing reliance placed on them is accompanied by an increasing legitimation of their "knowledge base." […] One would expect that large numbers of individuals, living in a society in which anonymous, hence irresponsible, forces formulate the large questions of the day and circumscribe the range of possible answers, would experience a kind of impotence and fall victim to a mindless rage. And surely we see that expectation fulfilled all around us, on university campuses and in factories, in homes and offices. Its manifestations are workers' sabotage of the products of their labor, unrest and aimlessness among students, street crime, escape into drug-induced dream worlds, and so on. Yet an alternative response is also very pervasive; as seen from one perspective, it appears to be resignation, but from another perspective it is what Erich Fromm long ago called "escape from freedom." The "good German" in Hitler's time could sleep more soundly because he "didn't know" about Dachau. 
He didn't know, he told us later, because the highly organized Nazi system kept him from knowing. (Curiously, though, I, as an adolescent in that same Germany, knew about Dachau. I thought I had reason to fear it.) Of course, the real reason the good German didn't know is that he never felt it to be his responsibility to ask what had happened to his Jewish neighbor whose apartment suddenly became available. The university professor whose dream of being promoted to the status of Ordinarius was suddenly fulfilled didn't ask how his precious chair had suddenly become vacant. Finally, all Germans became victims of what had befallen them. Today even the most highly placed managers represent themselves as innocent victims of a technology for which they accept no responsibility and which they do not even pretend to understand. (One must wonder, though, why it never occurred to Admiral Moorer to ask what effect the millions of tons of bombs the computer said were being dropped on Viet Nam were having.) The American Secretary of State, Dr. Henry Kissinger, while explaining that he could hardly have known of the "White House horrors" revealed by the Watergate investigation, mourned over "the awfulness of events and the tragedy that has befallen so many people."

-- Joseph Weizenbaum, Computer Power and Human Reason (1976)

2 notes

Text

AI Barbie doll trend

If you have ever wondered what you would look like as a Barbie doll, now is your chance, in a new trend on social media at the moment.

I would also like to add here that the above picture is an interesting image of me as a Barbie doll generated by ChatGPT.

As I have said before, there seems to be an interesting trend on social media lately of people turning themselves into Barbie dolls, as highlighted by the BBC article below.

If you want to create your own Barbie doll image, please read the article below, which also highlights concerns about the trend. If, after reading it, you have concerns, then don't do it; don't be pressured into doing something that you are uncomfortable with.

ChatGPT AI action dolls: Concerns around the Barbie-like viral social trend

I would also like to add that if you are a parent of children or teenagers under 18, please supervise them, give them appropriate guidance, and put appropriate safeguards in place. The articles below highlight the risks and offer advice about AI for parents of children and teenagers under 18.

The Children’s Commissioner’s view on artificial intelligence (AI) | Children's Commissioner for England

Teens are using AI a lot more than parents think

#ChatGPT#AI#art#ai generated#ai generated art#ethics#computer safety#computer ethics#artificial intelligence#computer#computers#Ethical

0 notes

Text

The Ten Commandments of Computer Ethics are so funny to me, not only because they are called "commandments" but also because the wording is like this:

#i'm laughing yes but it also feels nice to see smh#ten commandments#computer ethics#computer science#women in stem#thou shalt not

5 notes

Text

Let’s play a game…

Get your friends together and put your cell phones in the middle of the room. Make sure they’re on. Then, carry on a 30-minute discussion of things you never talk about. Here are some ideas:

Vinyl records of artists

Cities in Europe

Luxury guitars

Having children, diapers, baby registries

Cyber security

Now, note what ads you get for the next week. If any of your friends don’t get their ads tainted, post their phone model here.

Have fun!

#cyber security#advertising#data security#data privacy#data analytics#privacymatters#internet privacy#online privacy#ethics in ai#ethical ai#ethics#computer ethics

1 note

Text

I’m not even in STEM (I’m a History major), but I still chose to take a Computer Ethics class over the first half of this summer, and the idea that I now know more about computer ethics than the vast majority of people who actually work in the field (despite only having six weeks of study!) is very unsettling.

The AI issue is what happens when you raise generation after generation of people to not respect the arts. This is what happens when a person who wants to major in theatre, or English lit, or any other creative major gets the response, "And what are you going to do with that?" or "Good luck getting a job!"

You get tech bros who think it's easy. They don't know the blood, sweat, and tears that go into a creative endeavor because they were taught to completely disregard that kind of labor. They think they can just code it away.

That's (one of the reasons) why we're in this mess.

18K notes

Text

What I really appreciate about The Talos Principle 2 is that big chunks of its writing genuinely read like they were written by someone who's personally had to justify the discipline of philosophy to a STEM major. "There exists an implicit moral algorithm in the structure of the cosmos, but actually solving that algorithm to determine the correct course of action in any given circumstance a priori would require more computational power than exists in the universe. Thus, as we must when faced with any computationally intractable problem, we fall back on heuristic approaches; these heuristics are called 'ethics'." is a fascinating way of framing it, but then I ask why would you explain it like that, and every possible answer is hilarious.

#gaming#video games#the talos principle 2#the talos principle#philosophy#ethics#computer science#stem#the talos principle 2 spoilers#the talos principle spoilers#spoilers

2K notes

Text

This is a real thing that happened and got a Google engineer fired in 2022.

–

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted june 13#polls about ethics#ai#artificial intelligence#computers#robots

447 notes

Text

I archived the magnus archives

idk link to the full thing is here https://docs.google.com/spreadsheets/d/1vA6kin0H5bk_Nu15WTnwmAEPTAx9MLVO/edit?usp=sharing&ouid=117712544114786577413&rtpof=true&sd=true

I genuinely don't know what anyone would use this for, and honestly doing recreational paperwork is crazy and i need to sit with myself for a bit and think about this one

anyway jon's case numbering system sucks so i made a new one, not really that good but it acts better as a call number system. In real life there would be albums and catalogue numbers but thank god the magnus institute archive does NOT exist so that's not an issue

#the magnus archives#tma#jonathan sims#martin blackwood#i literally did an unpaid shift as an archival assistant then came home and did this#autism overload#also dear god using smooth delicious Microsoft excel at work makes google sheets feel like one of those fake children's play toy-#-computers like what the hell why is office 365 so damn expensive i want nice cells i want capitalisation functions google sheets count you#damn days count your days thats what im trying to day#and don't tell me its an ethical issue for the statement givers last name to be in the call number#the magnus institute kills people i don't think they care

94 notes

Text

One day it will all be worth it <3

Nights like this can be so draining and tiring but posting stuff like this remotivates me to keep going. Struggling so hard to not be distracted but it will work out…

#academia#academic validation#academic weapon#history#philosophy#philanthropy#ethics#notes#study motivation#student life#studying#studyblr#studyspo#student#study blog#study aesthetic#study notes#my notes#life lessons#love#live#life#ethical lifestyle#good life#college life#kiss of life#hydration#owala#computer#trees

104 notes

Text

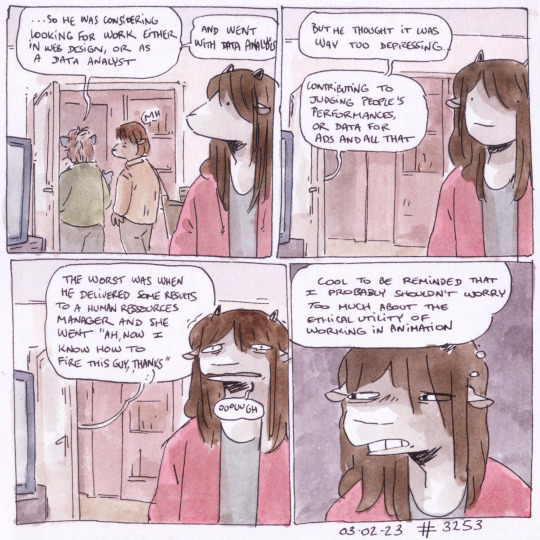

#03.02.23#3253#'ethical utility of working in animation' i think i wasthinking back to how when i was studying animation we had chats about like#isnt it wasteful to have a ton of computers running to render high detailed animation or whatever ;#that plus also does working in that field actually do any good i guess#unnecessary doubts because there's definitely definitely stuff a lot more harmful c'mon#ma

276 notes

Text

regardless of my or your opinion on AI art, saying that artists also make their art by simply viewing a lot of art and then regurgitating a mixture of statistically probable pixels implies that humans learn like machines do, and this isn't even remotely accurate. ignoring the phenomenological and social components of human learning really only stands to dovetail into viewing humans as material goods on the same level as a server farm. psychology has actually been sliding into viewing the brain as computers, and computers as equivalents to the human brain, and it's caused a lot of harm. we can't talk about some psychological phenomena without using Computer Terms to do it because that's the language that was given to them. viewing humans and computers as functionally equal with enough bits to replicate neurons won't humanize robots, it will dehumanize humans. it is advanced as an ideology for that purpose. in the end i see humans viewing art and integrating it into their own ideas for art as valuable because i think that humans don't exist to create a profit for some guy in silicon valley and i think that enriching human lives is good. humans don't "learn" art in order to produce replicas; the art is a byproduct.

#i'm trying to avoid the idea of a soul or whatever when trying to differentiate them#but the existence of consciousness often equivocated to 'having a soul' is significant and entirely different from machine learning#we have to define values and ethical systems that structure our society and i think that system of ethics should define conscious life#as valuable and that doesn't just extend to humans it's exactly like humanely dispatching animals is also an important ethical principle#i don't want to live in a society that sees a computer with the same amount of 'neurons' as a human brain as equally valuable to a person#at the end of the day.

41 notes

Text

watching Alexander Avila's new AI video and while:

I agree that we need more concrete data about the true amount of energy Generative AI uses as a lot of the data right now is fuzzy and utilities are using this fuzziness to their advantage to justify huge building of new data centers and energy infrastructure (I literally work in renewables lol so I see this at work every day)

I also agree that the copyright system sucks and that the lobbyist groups leveraging Generative AI as a scare tactic to strengthen it will probably ultimately be bad for artists.

I also also agree that trying to define consciousness or art in a concrete way specifically to exclude Generative AI art and writing will inevitably catch other artists or disabled people in its crossfire. (Whether I think the artists it would catch in the crossfire make good art is an entirely different subject haha)

I also also also agree that AI hype and fear mongering are both stupid and lump so many different aspects of growth in machine learning, neural network, and deep learning research together as to make "AI" a functionally useless term.

I don't agree with the idea that Generative AI should be a meaningful or driving part of any kind of societal shift. Or that it's even possible. The idea of a popular movement around this is so pie in the sky that it's actually sort of farcical to me. We've done this dance so many times before, what is at the base of these models is math and that math is determined by data, and we are so far from both an ethical/consent based way of extracting that data, but also from this data being in any way representative.

The problem with data science, as my data science professor said in university, is that it's 95% data cleaning and analyzing the potential gaps or biases in this data, but nobody wants to do data cleaning, because it's not very exciting or ego boosting, and the amount of human labor it would take to do that on a scale that would train a generative AI LLM is frankly extremely implausible.

Beyond that, I think ascribing too much value to these tools is a huge mistake. If you want to train a model on your own art and have it use that data to generate new images or text, be my guest, but I just think that people on both sides fall into the trap of ascribing too much value to generative AI technologies just because they are novel.

Finally, just because we don't know the full scope of the energy use of these technologies and that it might be lower than we expected does not mean we get a free pass to continue to engage in immoderate energy use and data center building, which was already a problem before AI broke onto the scene.

(also, I think Avila is too enamoured with post-modernism and leans on it too much but I'm not academically inclined enough to justify this opinion eloquently)

17 notes

Text

"Major AI companies are racing to build superintelligent AI — for the benefit of you and me, they say. But did they ever pause to ask whether we actually want that?

Americans, by and large, don’t want it.

That’s the upshot of a new poll shared exclusively with Vox. The poll, commissioned by the think tank AI Policy Institute and conducted by YouGov, surveyed 1,118 Americans from across the age, gender, race, and political spectrums in early September. It reveals that 63 percent of voters say regulation should aim to actively prevent AI superintelligence.

Companies like OpenAI have made it clear that superintelligent AI — a system that is smarter than humans — is exactly what they’re trying to build. They call it artificial general intelligence (AGI) and they take it for granted that AGI should exist. “Our mission,” OpenAI’s website says, “is to ensure that artificial general intelligence benefits all of humanity.”

But there’s a deeply weird and seldom remarked upon fact here: It’s not at all obvious that we should want to create AGI — which, as OpenAI CEO Sam Altman will be the first to tell you, comes with major risks, including the risk that all of humanity gets wiped out. And yet a handful of CEOs have decided, on behalf of everyone else, that AGI should exist.

Now, the only thing that gets discussed in public debate is how to control a hypothetical superhuman intelligence — not whether we actually want it. A premise has been ceded here that arguably never should have been...

Building AGI is a deeply political move. Why aren’t we treating it that way?

...Americans have learned a thing or two from the past decade in tech, and especially from the disastrous consequences of social media. They increasingly distrust tech executives and the idea that tech progress is positive by default. And they’re questioning whether the potential benefits of AGI justify the potential costs of developing it. After all, CEOs like Altman readily proclaim that AGI may well usher in mass unemployment, break the economic system, and change the entire world order. That’s if it doesn’t render us all extinct.

In the new AI Policy Institute/YouGov poll, the “better us [to have and invent it] than China” argument was presented five different ways in five different questions. Strikingly, each time, the majority of respondents rejected the argument. For example, 67 percent of voters said we should restrict how powerful AI models can become, even though that risks making American companies fall behind China. Only 14 percent disagreed.

Naturally, with any poll about a technology that doesn’t yet exist, there’s a bit of a challenge in interpreting the responses. But what a strong majority of the American public seems to be saying here is: just because we’re worried about a foreign power getting ahead, doesn’t mean that it makes sense to unleash upon ourselves a technology we think will severely harm us.

AGI, it turns out, is just not a popular idea in America.

“As we’re asking these poll questions and getting such lopsided results, it’s honestly a little bit surprising to me to see how lopsided it is,” Daniel Colson, the executive director of the AI Policy Institute, told me. “There’s actually quite a large disconnect between a lot of the elite discourse or discourse in the labs and what the American public wants.”

-via Vox, September 19, 2023

#united states#china#ai#artificial intelligence#superintelligence#ai ethics#general ai#computer science#public opinion#science and technology#ai boom#anti ai#international politics#good news#hope

201 notes
